Detect DeepFakes: How to counteract misinformation created by AI.

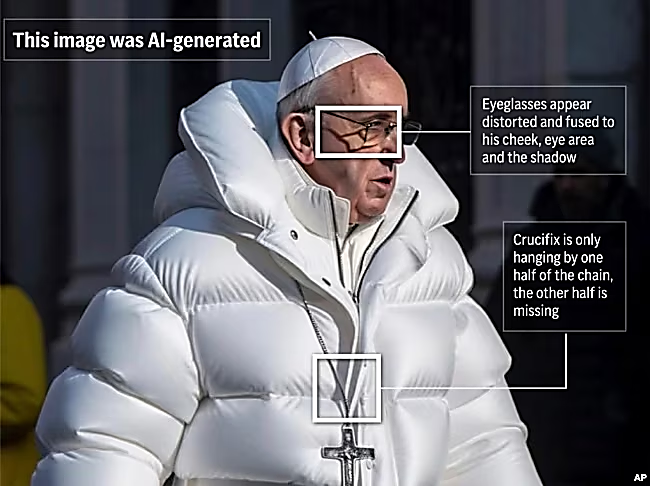

Voice of America recently published this image to teach its audience how to spot manipulated images.

Everyone—but business leaders more so—needs to know how to detect DeepFakes.

You’ve all likely read about the big DeepFake scam that recently made headlines.

Scammers used DeepFakes in a video call and tricked an employee into sending them more than $25 million, CNN reported. The victim received an email that was set up to look like it was from the company’s CFO, which he found to be suspicious because it instructed him to transfer a large amount of money to an offshore account. However, the perpetrators allayed his doubts by inviting him to a video call and chatting with him “face-to-face”… or so he thought. As it turned out, the CFO and other colleagues he met there were all DeepFakes.

Today, AI has advanced to a point where DeepFakes have become incredibly believable.

It’s not the existence of DeepFakes, so much as what they are being used for, that is alarming. These manipulated images and footage are being used to defame people, like pop artist Taylor Swift, who was enraged by explicit DeepFake images of her that were circulated publicly early in 2024. They’re also being used to create havoc during elections, like the DeepFake video of KT Rama Rao, a leader of the Bharat Rashtra Samithi, asking people to vote for the Congress Party. In the US, a DeepFake voice recording of President Joe Biden urged people not to vote in a presidential primary election.

And, of course, DeepFakes are being used in scams, like the $25 million scam we discussed at the start of this blog.

So, what is a DeepFake exactly? As the name suggests, the term describes audio, video, and images that are faked using deep learning and other AI technologies.

As you can imagine, with AI’s current capabilities, it is easy to train a machine learning model on hours of real footage of a target so that it talks, emotes, and uses mannerisms the way that person does.

The ammo: Tech that detects DeepFakes

Many tech firms are racing to create tech that will help individuals and organizations recognize DeepFakes. Market leaders Intel Corp., Microsoft, and Meta Platforms Inc. have built tools that promise to root out DeepFakes. Intel’s FakeCatcher, launched in 2022, reportedly detects DeepFake videos with a 96% accuracy rate within milliseconds. Meta has not only developed methods to identify DeepFakes but has also announced that it will start labeling AI-generated DeepFake images on Facebook and Threads. Meta hopes the move will also pressure its peers into doing the same.

Some of these market leaders even took up a public initiative, the Kaggle DeepFake Detection Challenge, that looked to the public for “an algorithmic answer to the question of detecting [deep]fakes.” The description on the Kaggle Website said, “AWS, Facebook, Microsoft, the Partnership on AI’s Media Integrity Steering Committee, and academics have come together to build the DeepFake Detection Challenge. The goal is to spur researchers worldwide to build innovative new technologies that can help detect DeepFakes and manipulated media.”

Let’s look at a handful of other tools and their audiences and use cases:

- DeepWare AI allows users to “scan a video” to determine if it has been “synthetically manipulated.” Users simply need to upload a video to their site (making it accessible to the public) and hit the scan button. They also offer on-premise and API solutions.

- Sensity AI offers a full suite of fraud detection services, including DeepFake image, video, audio, and document detection. Their focus sectors are banking, fintech, gaming, transportation, defense, and intelligence, which indicates the pervasive nature of the dangers posed by DeepFakes.

- The Sentinel targets governments, media, and defense agencies to “help protect democracies from disinformation campaigns, synthetic media” and misinformation operations.

- TrueMedia is a non-profit with a DeepFake audio and video detection tool trained on hours of DeepFake footage. Their focus is on identifying political DeepFakes on social media.

- Detect Fakes is a forum that aims to answer questions about DeepFakes. The site displays thousands of curated, high-quality DeepFake videos publicly.

The know-how: Telltale signs visible to the naked eye

One of the solution providers in the list above, TrueMedia, has made an important observation: The cost of creating DeepFakes has plunged drastically. This means the world can expect to see them disseminated in much larger volumes. So, how do businesses tackle this looming problem? How do you protect your organization and avoid becoming a headline like the infamous $25 million DeepFake scam?

Updating the methods used to authenticate and verify images, audio, and video content should be a primary move, alongside employee training and education. Most companies have already honed a culture of vigilance around old-school phishing and social engineering methods as part of proactive safeguards or regulatory compliance protocols. Employees similarly need to be educated on the new and growing threat of DeepFakes. The idea is to teach people to watch for inconsistencies, especially when there is movement.

Here are some tips for detecting DeepFake videos. Remember, DeepFakes will very likely show the same flaws that make AI-generated images reasonably easy to recognize:

- Shadows: Are shadows correctly positioned around the eyes and below the chin (and elsewhere in the photo)? DeepFakes might lack an accurate representation of natural lighting. Observe the eyebrows—do they look normal; are they animated as they always are?

- Glare: Of course, you need a bespectacled subject in the video for this one to work. Is there a noticeable glare from their glasses? Alternatively, is there no reflection at all? (That’s not exactly possible!) Does the glare angle adjust with the person’s movements? DeepFakes often struggle to replicate authentic lighting effects.

- Skin texture: Take note of the skin texture on the cheeks and forehead. Does it appear either excessively smooth or wrinkled? Is aging consistent across the skin, hair, and eyes? DeepFakes may exhibit inconsistencies in these aspects, much like the strangely wrinkled foreheads you’ll notice in AI-generated images.

- Facial hair: Not just eyebrows but beards, mustaches, etc. Do these features look genuine, or have they been digitally added or removed? Despite attempts, DeepFakes may not consistently achieve seamless facial hair alterations.

- Moles: Check for a realistic representation of facial moles. Is the mole present? Is it where it is supposed to be? Does the mole look real?

- Eyes: Pay attention to blinking patterns. Does the frequency of blinking align with natural behavior?

- Mouth: Observe lip movements closely. Do the lip movements appear realistic? Some DeepFakes rely on lip-syncing.

- Edges: Look around the subject’s chin and other edges of the face and head. Any blurring or distortion could be an indication of a DeepFake video.

- Logic: Other than the physical signs listed above, people should also simply ask if the subject’s actions are logical, expected, or part of their usual behavior as a critical move in detecting DeepFakes. In the $25 million DeepFake scam, the duped employee was, in fact, initially suspicious because he was asked to transfer funds to an offshore account, which was an irregularity.
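To make the blinking check in the list above a little more concrete, here is a minimal Python sketch of one common heuristic, the eye aspect ratio (EAR). It assumes a separate face-landmark tool (not shown) already supplies six points per eye for each frame. The function names, the 0.2 threshold, and the two-frame minimum are illustrative assumptions, not settings taken from any of the detectors mentioned in this post.

```python
import math


def eye_aspect_ratio(eye):
    """EAR for six (x, y) eye landmarks ordered p1..p6.

    p1 and p4 are the eye corners; p2, p3 sit on the upper lid and
    p6, p5 sit directly below them on the lower lid.
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal


def count_blinks(ear_series, closed_threshold=0.2, min_closed_frames=2):
    """Count runs of consecutive frames in which the eye looks closed.

    closed_threshold and min_closed_frames are assumed values and would
    need tuning for a real camera and landmark model.
    """
    blinks = 0
    closed_run = 0
    for ear in ear_series:
        if ear < closed_threshold:
            closed_run += 1
        else:
            if closed_run >= min_closed_frames:
                blinks += 1
            closed_run = 0
    # Count a blink that is still in progress when the clip ends.
    if closed_run >= min_closed_frames:
        blinks += 1
    return blinks


def blinks_per_minute(ear_series, fps):
    """Convert a per-frame EAR series into a blink rate."""
    minutes = len(ear_series) / fps / 60.0
    return count_blinks(ear_series) / minutes if minutes > 0 else 0.0
```

A blink rate that is far from what is usual for the person on screen is a reason to look closer, not proof of a DeepFake on its own.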

A lot of the same rules apply to DeepFake images.

Meanwhile, you might be able to detect DeepFake audio by listening for:

- Slurring on specific words and phrases (sure, the sender could be tired, sick, or under the influence, but then they should slur almost continuously)

- Static (though this could admittedly be chalked up to poor microphone quality or an unstable network connection)

- Unnaturalness, which some refer to as “less full” or “just not right,” especially if it is a voice you recognize.

The science: How the DeepFake detection tech works

Some of us need more convincing than others. If you’re thinking, “How exactly do detectors spot DeepFakes? What makes them so foolproof? Can I really trust the detector?” then this section is just for you. (If you’re already convinced and don’t feel like diving deeper, scroll on…)

Intel’s FakeCatcher, for example, uses multiple methods, some of which we’ve detailed below:

In one method, Intel uses the subtle facial color changes caused by blood flow to determine authenticity. These photoplethysmography (PPG) signals are present across the skin of a real face, produce natural color fluctuations, and stay coherent from one facial region to another. Focusing only on these color cues lets the detector ignore other, potentially manipulated signals in the video, and the approach reportedly achieved a 91% accuracy rate in testing. Changes in lighting do not eliminate PPG signals, but once generative AI has altered the footage, anomalies start to show up.
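For the curious, the blood-flow idea can be sketched in a few lines of Python. This is not Intel’s implementation. It is a simplified illustration that assumes you already have the average green-channel brightness of a skin region for every frame (the face tracking that would produce it is not shown). The function names, the 0.7 to 4 Hz search band, and the 6 bpm tolerance are assumptions made for this example.

```python
import math


def dominant_pulse_bpm(green_means, fps, low_hz=0.7, high_hz=4.0, step_hz=0.05):
    """Estimate the strongest pulse-like frequency in a brightness series.

    green_means: average green value of one skin region, one entry per frame.
    Only frequencies in a plausible heart-rate band are searched.
    """
    n = len(green_means)
    if n == 0:
        raise ValueError("need at least one frame")
    mean = sum(green_means) / n
    centered = [g - mean for g in green_means]  # remove the constant skin tone

    best_freq, best_power = 0.0, -1.0
    steps = int(round((high_hz - low_hz) / step_hz))
    for k in range(steps + 1):
        freq = low_hz + k * step_hz
        # Correlate the series with a cosine and a sine at this frequency.
        re = sum(c * math.cos(2 * math.pi * freq * i / fps)
                 for i, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * freq * i / fps)
                 for i, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    return best_freq * 60.0


def regions_agree(region_a, region_b, fps, tolerance_bpm=6.0):
    """Check that two facial regions show roughly the same pulse rate."""
    bpm_a = dominant_pulse_bpm(region_a, fps)
    bpm_b = dominant_pulse_bpm(region_b, fps)
    return abs(bpm_a - bpm_b) <= tolerance_bpm
```

On a real face, the forehead and a cheek should report roughly the same pulse. In this toy version, a large disagreement between regions, or no clear pulse at all, is the kind of anomaly that would warrant a closer look.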

Another of Intel’s methods relies on facial motion, which does not blend well with facial structure when manipulated with generative technology. This method scored 97% in accuracy tests and could also zero in on the algorithm that created the DeepFake.

Meanwhile, Luisa Verdoliva, a researcher in Naples, uses biometric signatures to differentiate between authentic and deepfaked audio and images. The system was trained on images from nearly 1,500 cameras, which allowed it to detect anomalies in images captured by new models. It is also able to spot images altered using good old Photoshop techniques.
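On the “Can I really trust the detector?” question, a quick calculation helps put accuracy figures like the 96% quoted earlier in context. The Python sketch below is a hypothetical illustration: it assumes the detector catches 96% of fakes and correctly clears 96% of genuine videos, and the share of fakes in the incoming stream is an invented input, not a measured figure.

```python
def share_of_flags_that_are_fake(sensitivity, specificity, prevalence):
    """Fraction of flagged videos that really are fake.

    sensitivity: share of fakes the detector flags (assumed)
    specificity: share of genuine videos the detector clears (assumed)
    prevalence:  share of all incoming videos that are fake (assumed)
    """
    true_flags = sensitivity * prevalence
    false_flags = (1.0 - specificity) * (1.0 - prevalence)
    return true_flags / (true_flags + false_flags)


# If 1 in 100 incoming videos is fake, most flags are false alarms.
rare = share_of_flags_that_are_fake(0.96, 0.96, 0.01)

# If half of the incoming videos are fake, almost every flag is right.
common = share_of_flags_that_are_fake(0.96, 0.96, 0.50)

print(f"fakes are rare:   {rare:.0%} of flags are real fakes")
print(f"fakes are common: {common:.0%} of flags are real fakes")
```

Under these assumptions, the same detector is far more convincing when fakes are common than when they are rare, which is one reason to pair any tool with the human checks described above.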

The future: More wrestling ahead

DeepFake creation and detection is an arms race in which each side builds on the other. Given a new detection method, someone can often train a generation algorithm to better fool it. For now, companies can practice vigilance, keep stakeholder and employee awareness up to date, and invest in DeepFake detection tools relevant to their context… while watching this space for further updates.

Worried about DeepFakes ruining customer-facing experiences and assets? Your fears aren’t unfounded; the risk is real! Work with an experienced company like magineu that puts security at the forefront even while designing creative experiences for your key stakeholders and customers.